The Challenges of GPT-3

GPT-3 is a language model that has captured the attention of many researchers, developers, and businesses. Despite its impressive capabilities, it presents some significant challenges that must be addressed.

One of the main challenges of GPT-3 is its reliance on large amounts of data. The model learns from an extensive text corpus during training, and the accuracy of its results depends on how well that corpus covers the task at hand.

Another challenge is the potential for bias. Since the model is trained on text data from the internet, it may learn and propagate biases that exist in the data.

A further challenge is the difficulty of interpreting GPT-3’s outputs. Some researchers have questioned whether the model generates outputs that are genuinely original or merely a combination of rephrased text from its training data.

These challenges highlight the need for more research in the field of natural language processing to address the limitations of GPT-3 and create more robust and reliable models for human use.

How Does GPT-3 Work?

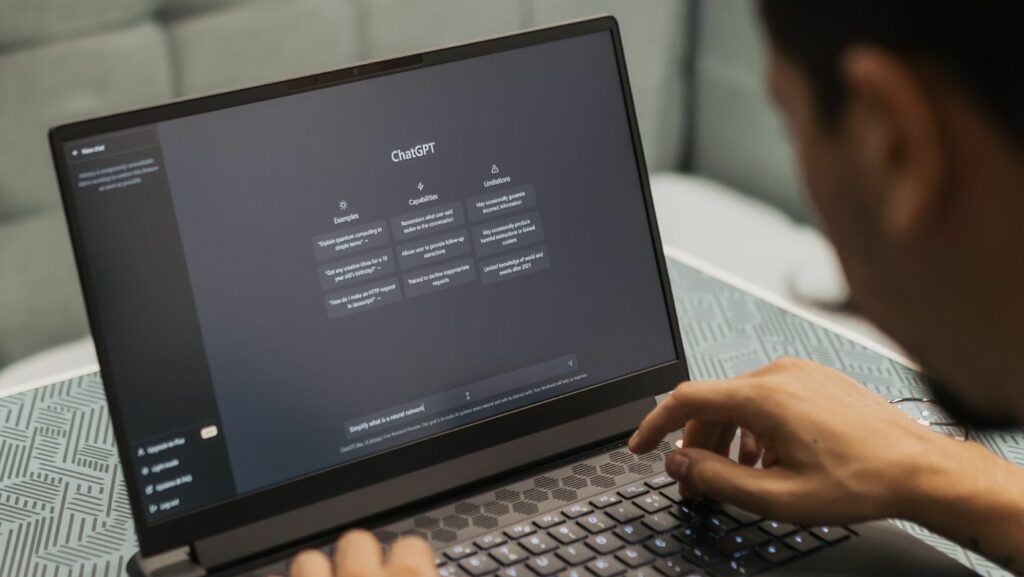

GPT-3 is an artificial intelligence (AI) language model created by OpenAI. It is a powerful tool that can generate natural language from minimal input, producing complex text, summaries, and stories in response to a prompt.

But there are still some challenges with GPT-3 that need to be addressed. In this article, we will discuss the capabilities, the challenges, and how GPT-3 works.

Explanation of GPT-3 and How it Works

GPT-3, or Generative Pre-trained Transformer 3, is a state-of-the-art language prediction model developed by OpenAI. It uses deep learning algorithms to generate human-like text based on the input given to it, making it capable of emulating various writing styles and responding to prompts for tasks like translation, summarization, and more.
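To make the idea of "language prediction" concrete, here is a toy sketch of generating text one word at a time. It is not how GPT-3 is implemented: GPT-3 uses a large Transformer network, while this example swaps in a simple table of word pairs. The function names and the sample corpus are made up for illustration.

```python
import random
from collections import defaultdict


def build_bigram_table(text):
    """Map each word to the list of words that follow it in the text."""
    words = text.split()
    table = defaultdict(list)
    for current, following in zip(words, words[1:]):
        table[current].append(following)
    return table


def generate(table, prompt_word, max_words=10, seed=0):
    """Extend a prompt one word at a time by sampling a seen continuation."""
    rng = random.Random(seed)
    output = [prompt_word]
    for _ in range(max_words - 1):
        candidates = table.get(output[-1])
        if not candidates:  # no known continuation: stop early
            break
        output.append(rng.choice(candidates))
    return " ".join(output)


# Tiny illustrative corpus (an assumption, not real training data)
corpus = "the model reads text and the model predicts the next word"
table = build_bigram_table(corpus)
print(generate(table, "the"))
```

The loop captures the core pattern: look at what has been written so far, pick a plausible next word, append it, and repeat. Real models condition on far more context than the single previous word used here.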

GPT-3 is trained on an extensive dataset of text, allowing it to generate more accurate and coherent language than previous language models. However, the system is not without its challenges, such as the potential for bias in the data it is trained on and the need for significant computational power to run effectively.

Despite these challenges, GPT-3 has strong potential for various applications, including natural language processing, content generation, and more. Its capabilities are impressive, and the potential for its application is vast.

Benefits of Using GPT-3

GPT-3 is a revolutionary technology that has changed the way we process information and interact with machines. Its benefits are numerous, making it a popular choice for developers, researchers, and businesses worldwide.

Here are some of the benefits of using GPT-3:

1. Language processing: GPT-3 is capable of understanding natural language and producing human-like responses, improving the accuracy and effectiveness of communication between humans and machines.

2. Time-saving: With GPT-3, developers and researchers can quickly generate high-quality texts, reducing the time and effort needed to complete a project.

3. Multilingual: GPT-3 can read and write in multiple languages, giving businesses an edge in the global market.

4. Cost-effective: GPT-3 can automate tasks that would require multiple employees, making it a cost-effective solution for businesses.

Despite its benefits, GPT-3 poses a few challenges, such as ethical concerns, bias, and data privacy issues. Therefore, it is crucial to use GPT-3 with caution and accountability.

Limitations of GPT-3

GPT-3 has taken the AI world by storm with its remarkable ability to generate human-like language. However, even GPT-3 has limitations that keep it from being a perfect language generator. Here are a few challenges of GPT-3 that one should be aware of.

Lack of Common Sense – GPT-3 draws on a vast pool of text, but it often lacks basic common sense and can fail to differentiate between relevant and irrelevant information.

Over-dependence on Training Data – GPT-3 needs massive amounts of data to generate fluent language, so its output can be biased if the training data is not diverse.

Inability to Grasp Context – GPT-3 generates language from statistical patterns rather than genuine comprehension, so it can misread context, making it prone to ambiguity and errors.

Expensive Resource Consumption – GPT-3 requires a sizeable cloud infrastructure to cater to its storage and processing needs, making it an expensive resource to work with.

However, even with its limitations, GPT-3 has undoubtedly made a considerable contribution to the field of AI and has opened the doors for further developments in language generation.

Challenges of Implementing GPT-3

GPT-3 (Generative Pre-trained Transformer 3) is a powerful machine learning model that has changed the way artificial intelligence can be applied to a wide range of tasks. With its ability to generate natural language text, GPT-3 has opened new opportunities for researchers and developers building machine learning applications.

However, implementing GPT-3 comes with its own set of challenges. In this article, we will discuss the challenges associated with implementing GPT-3.

Integration with Existing Systems

GPT-3 is a powerful tool for natural language processing, but its integration with existing systems presents several challenges. One of the main challenges is compatibility with different programming languages and platforms.

Additionally, GPT-3’s large size and computational requirements make it difficult to integrate with smaller systems or those with limited resources.

Another challenge is data privacy and security, as GPT-3 may require access to sensitive information to function effectively.

Finally, GPT-3’s potential biases and limitations in understanding certain cultural or linguistic nuances can also pose challenges for integrating it into existing systems while maintaining fairness and accuracy.

To address these challenges, careful consideration and planning are necessary before implementing GPT-3 into an existing system. Working with experienced developers and ensuring data privacy and security measures are in place can help mitigate these challenges. Additionally, ongoing monitoring and testing can help identify and address any biases or limitations in GPT-3’s capabilities.

Cost Considerations

GPT-3 has unprecedented abilities in natural language processing. However, implementing it comes with certain challenges, and cost is a significant one.

GPT-3’s cost has steered many small-scale enterprises away from implementing it in their workflow. Access through OpenAI’s API is billed by usage, based on the number of tokens processed, and the rate varies with the model chosen. At high volumes, the bill may run to tens of thousands of dollars annually, while lighter usage or smaller models cost correspondingly less.

However, the cost factor needs to be weighed against the undeniable benefits of GPT-3’s cutting edge language processing abilities. For larger organizations, the results that a tool like GPT-3 can provide are worth the investment. It is key for businesses to evaluate their usage needs and plan a budget accordingly.
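One way to evaluate usage needs before committing to a budget is a back-of-the-envelope estimate. The sketch below assumes usage-based billing per 1,000 tokens. The price, the request volume, and the tokens-per-word ratio are placeholder assumptions, not actual rates, so substitute the figures from your provider's current price list.

```python
def estimate_monthly_cost(requests_per_day, words_per_request,
                          price_per_1k_tokens, tokens_per_word=1.33, days=30):
    """Rough monthly spend under usage-based, per-token billing."""
    tokens_per_request = words_per_request * tokens_per_word
    monthly_tokens = requests_per_day * tokens_per_request * days
    return monthly_tokens / 1000 * price_per_1k_tokens


# Placeholder inputs: 1,000 requests/day, 500 words each (prompt plus reply),
# at a hypothetical $0.02 per 1,000 tokens.
cost = estimate_monthly_cost(1000, 500, price_per_1k_tokens=0.02)
print(f"Estimated monthly cost: ${cost:,.2f}")
```

Running the same function with a few different volumes and prices gives a quick sense of how sensitive the budget is to growth in usage.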

Pro Tip: Many third-party tools offer access to GPT-3 at relatively affordable prices. So, before shelling out thousands, explore the options available to you.

Data Requirements and Availability

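One of the major challenges of implementing GPT-3 technology is the availability of data and its requirements. GPT-3 was trained on a massive amount of text, and adapting it to a specific use case requires high-quality data of your own. That data may come from both structured and unstructured sources, but GPT-3 works only with text, so material such as images or audio must first be converted into text (for example, through transcription).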

Here are some issues related to data availability and requirements for GPT-3:

- Access to high-quality training data is a challenge, especially for small businesses or startups with limited resources.

- Preparing data for GPT-3 training can be complex, time-consuming, and expensive.

- GPT-3 may not be able to generate accurate results if the input data is biased or incomplete.

- Scaling up data requirements for GPT-3 could be cost-prohibitive for some businesses, leading to a lack of innovation in the field.

Adapting to these challenges is crucial for companies looking to implement GPT-3 effectively and reap the benefits of this technology.

Ethical Considerations and Risks Associated with GPT-3

GPT-3 (Generative Pre-trained Transformer 3) has sparked a great deal of excitement about its potential applications in a wide range of fields. However, there are also certain ethical considerations and risks associated with the introduction of such a powerful text-generating system.

This article will look at some of the potential ethical and risk considerations of GPT-3 and suggest ways of mitigating them.

Bias in Models

Bias in models is a growing concern in artificial intelligence, particularly with the emergence of powerful language generation models like GPT-3. The ethical considerations and risks associated with these models are numerous and can have far-reaching consequences.

GPT-3, for example, has been shown to reproduce and amplify biases in its training data. This can lead to discriminatory outputs, which can be particularly problematic in applications like hiring, loan approvals, and other areas that rely on machine learning algorithms.

Additionally, GPT-3 presents challenges in terms of understanding and controlling its outputs. The model has been shown to produce fictional outputs that can be mistaken for real-world information. This can create significant risks when the model is used to generate medical or legal advice, or in other contexts where accuracy is crucial.

The risks associated with bias in models are significant and require careful consideration to ensure that artificial intelligence is used in a responsible and ethical manner. By acknowledging and addressing these challenges, we can work to create models that are fair, transparent, and beneficial to society.

Privacy and Security Concerns

With the advent of GPT-3, concerns have arisen about privacy and security. These concerns relate to the ethical use of artificial intelligence and the potential for data breaches.

GPT-3 uses machine learning to learn from millions of documents and produce human-like text. Privacy concerns arise because developers may need to send their users’ data to the model to get useful results, which exposes that data to a third party. Security concerns center on potential malicious uses of the technology, including phishing attacks and impersonation.

Given the power of GPT-3, it is important to address these concerns and take proactive measures to mitigate the risks associated with it.

Pro Tip: When using GPT-3, secure your data by following data encryption standards and regularly monitoring for any security incidents. Always assess the ethical implications of using this type of technology and consider the potential risks before implementing it in your business.
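One practical precaution is to strip obvious personal data from a prompt before it leaves your system. The following is a minimal sketch that assumes emails and phone numbers are the main concern. The two patterns and the `redact` helper are illustrative and will miss many real-world formats, so treat this as a starting point rather than a complete safeguard.

```python
import re

# Simplified patterns (assumptions): real-world data needs broader coverage
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    text = PHONE.sub("[PHONE]", text)
    return text


prompt = "Contact Jane at jane.doe@example.com or +1 (555) 010-9999."
print(redact(prompt))
```

Redaction complements, but does not replace, encryption in transit and access controls on stored prompts and responses.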

Implications for Jobs and Industries

GPT-3’s capabilities may have significant implications for jobs and industries. While it has the ability to automate many mundane, repetitive tasks and enhance productivity, it may also displace human labor in some industries.

Ethical considerations and risks associated with GPT-3:

GPT-3’s deep learning capabilities raise questions about ethical considerations, data privacy, and bias in decision-making processes. Its ability to generate human-like text could make it difficult to distinguish between artificially generated content and human-authored content, leading to potential misuse, fake news, and increased concerns about data privacy.

The challenges of GPT-3:

Despite its potential benefits, GPT-3 faces several technical challenges, including scalability, interpretability, and robustness. The language it generates can be inconsistent, and the model may occasionally produce nonsensical or offensive content. These challenges must be addressed before GPT-3 can be fully integrated into various industries.

Pro tip: While GPT-3 presents opportunities for innovation and growth, it is essential to address the ethical implications and technical limitations associated with its use to create a sustainable and inclusive future.

Tips for Successful Implementation of GPT-3

GPT-3 has recently become part of the conversation around artificial intelligence, machine learning, and natural language processing. With its ability to generate human-like text and answer questions based on context, it is widely regarded as a revolutionary technology.

However, implementation of GPT-3 comes with its own challenges. In this article, we will explore some tips for successful implementation of GPT-3.

Identify Clear Use Cases

Implementing GPT-3 effectively requires a thorough understanding of its potential use cases, as well as an awareness of the challenges that may arise during its integration.

Here are tips for identifying clear use cases for GPT-3 and ensuring successful implementation:

1. Define specific business problems or goals that GPT-3 could help solve or achieve.

2. Identify potential use cases by evaluating GPT-3’s capabilities and limitations, including its strengths in natural language processing and its weaknesses in domain-specific knowledge.

3. Consider the resources and infrastructure needed to support GPT-3 integration, including data storage and processing requirements and potential privacy concerns.

4. Develop a clear project plan and timeline, including milestones, deliverables, and performance metrics, to ensure successful implementation and ongoing evaluation.

By following these tips, businesses can effectively leverage GPT-3 technology to improve their operations and outcomes.

Ensure Adequate Training Data

One of the biggest challenges of implementing GPT-3 successfully is ensuring adequate training data.

Here are some tips for overcoming this challenge:

1. Identify your specific use case and the type of data you need to train your model.

2. Collect as much relevant data as possible, ensuring that it is a representative sample of your intended application.

3. Clean and preprocess the data to remove any irrelevant or repetitive information and to ensure that it is in a format suitable for training a language model.

4. Augment your training data by using techniques such as data synthesis and data labeling to increase the quantity and quality of your data.

5. Continuously evaluate and retrain your model using new data to improve its performance and handle diverse scenarios.

By following these tips, you can increase the accuracy and effectiveness of your GPT-3 implementation.
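The cleaning step in the list above can be sketched in a few lines. This example assumes the data is a list of short text strings and that "cleaning" means collapsing whitespace, dropping very short entries, and removing case-insensitive duplicates. The `clean_examples` helper and the `min_words` threshold are illustrative choices, not a prescribed pipeline.

```python
import re


def clean_examples(raw_texts, min_words=3):
    """Normalize whitespace, drop very short entries, and remove duplicates."""
    seen = set()
    cleaned = []
    for text in raw_texts:
        normalized = re.sub(r"\s+", " ", text).strip()
        if len(normalized.split()) < min_words:
            continue  # too short to be a useful example
        key = normalized.lower()
        if key in seen:
            continue  # case-insensitive duplicate of an earlier entry
        seen.add(key)
        cleaned.append(normalized)
    return cleaned


raw = [
    "How do I reset   my password?",
    "how do I reset my password?",
    "Thanks!",
    "What are your opening hours on weekends?",
]
print(clean_examples(raw))
```

Keeping the first occurrence of each duplicate preserves the original ordering, which makes it easier to trace a cleaned example back to its source.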

Monitor and Adjust Performance Regularly

Monitoring and adjusting the performance of GPT-3 regularly is crucial for successfully implementing this powerful language model.

Here are some tips to help you manage and optimize GPT-3:

1. Set clear goals for your GPT-3 project and define metrics for measuring success.

2. Continuously track and analyze the system’s performance against your goals and metrics.

3. Identify and address performance bottlenecks proactively.

4. Fine-tune the system by adjusting parameters, experimenting with different configurations, and adding more training data.

5. Regularly evaluate the system’s outputs for quality and accuracy.

By constantly monitoring and adjusting performance, you can improve the accuracy and effectiveness of your GPT-3-powered applications and ensure you are getting the most out of this cutting-edge technology.
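As a small illustration of tracking performance against a metric, the sketch below scores a batch of model outputs against reviewed reference answers and flags the batch when it falls below a target. Exact match is only one possible metric, and the 0.8 target, the function names, and the sample data are assumptions chosen for the example.

```python
def exact_match_rate(outputs, references):
    """Share of outputs equal to the reference, ignoring case and outer whitespace."""
    if len(outputs) != len(references):
        raise ValueError("outputs and references must have the same length")
    if not outputs:
        return 0.0
    matches = sum(
        out.strip().lower() == ref.strip().lower()
        for out, ref in zip(outputs, references)
    )
    return matches / len(outputs)


def needs_attention(score, target=0.8):
    """Flag a batch whose score falls below the agreed target."""
    return score < target


# Hypothetical batch: model outputs and human-reviewed reference answers
outputs = ["Paris", "berlin ", "Rome"]
references = ["Paris", "Berlin", "Madrid"]
score = exact_match_rate(outputs, references)
print(f"exact match: {score:.2f}, needs attention: {needs_attention(score)}")
```

Logging this score for each batch over time makes regressions visible early, before they show up as user complaints.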